Introduction

There has been a lot of things happening in the IT industry, from the cloudification of applications to the advent of mobile devices. The Renaissance of native languages like C++ and its new standard C++11 to new releases of .NET framework all of this to support this evolution of the industry. One thing is clear, though both technologies despite of their differences can be used and implemented to leverage one another.

This article might be considered as a follow up to the talk I gave at Tech-Ed Australia 2011 called “Maximize .NET with C++ for interop, performance and productivity” where I mentioned some of the benefits and scenarios for implementing native code to improve .NET programs. Back then C++ AMP was not available, but it is today and we will take it as valid example that can greatly benefit .NET code.

In order to get started, we will have to ask three questions (What? Why? How?) So what is Metaprogramming? Let us refer to the definition of “Metaprogramming” as appears on Wikipedia – “It is the writing of computer programs that write or manipulate other programs (or themselves) as their data, or that do part of the work at compile time that would otherwise be done at runtime. In some cases, this allows programmers to minimize the number of lines of code to express a solution (hence reducing development time), or it gives programs greater flexibility to efficiently handle new situations without recompilation”.

Why bother about it? Metaprogramming can help developers to write declarative code by implementing attributes, which can modify the behavior of a program at runtime. The .NET framework provides developers with features that ease implementing this functionality in an elegant and convenient way. At the same time, Metaprogramming can also help building software following an AOP (Aspect Oriented Programming) approach.

How can we enhance our .NET code with metaprogramming via Visual C++? The idea is very simple and it comprises an interception mechanism based on attributes which in turn invoke native code written in Visual C++ and then results are returned to .NET. As described in the article, we will use more efficiently the hardware available by running heavy math operations on the GPU in methods marked with a [GpuRunnable] attribute, this pre-processing occurs before even executing the method itself.

Running code on the GPU is one of the many advantages of implementing native code from .NET. There are multiple APIs which are not currently available to managed languages, and they can also be easily implemented with this approach, but this does not only apply to native components because the main idea is to enhance our code by implementing pluggable components that can improve our application at runtime and since they are late bound, they could be easily upgraded or replaced without recompiling the entire application.

This article is organized in three main sections, which are:

-

· Part I: Overview of the interception mechanism and GPURunnable attribute (Managed)

-

· Part II: Efficiently using the hardware available by leveraging the GPGPU (Native)

-

· Part III: Putting all the pieces together

Part I: Overview of the interception mechanism and GPURunnable attribute

Metaprogramming can be achieved at least via three different approaches which are scripting, reflection and code generation; being reflection the one used in this article. One of the cool features in managed and scripting languages is the ability to examine via type introspection and modify the structure and behavior of an object at runtime. This is feature is available in Java, ML, Haskell, C# and Scala. The nearest feature in C++ to do something similar is RTTI (Run-Time Type Information).

Some developers might not be aware of the existing metaprogramming capabilities in .NET, even though when they use it on a daily basis. Two good examples of this are code-generation and attributes. Attributes provide a mechanism for defining declarative tags, which you can place on certain entities in your source code to specify additional information or metadata, but most importantly to customize behaviors or extract organizational information of the target at design, compile, or runtime.

The paradigm of attributed programming first appeared in the Interface Definition Language (IDL) of COM interfaces and then Microsoft extended the concept to Transaction Server (MTS) and used it heavily in COM+. Its main purpose is to provide two or more programs to interoperate between different contexts.

As described earlier, code execution on the GPGPU is possible due to implementing an interception mechanism that relies on a couple of attributes and a class as described below:

- Enhanceable attribute (inherits from ContextAttribute class): Context attributes are more than just metadata—they are derived from a class called ContextAttribute, which means that they have the responsibility of determining whether a candidate context has the required component services. The context attribute also has the responsibility of creating the sink object that provides the component service. The runtime calls the attribute and passes the next sink in the chain so that the attribute can create its own sink object and insert it into the chain.

- GpuRunnable attribute (inherits from Attribute class – Implements IGpuRunnable interface): This attribute contains information about the GpuProcessor to use. The GpuProcessor is a Win32 DLL written in C++ that uses C++ AMP (This is described in the following section). This DLL is loaded at runtime by the interception mechanism which uses the metadata information to determine function to call as well as attributes to be passed.

- ContextObject class (inherits from ContextBoundObject class): It is target of the interception because it is marked with [Enhanceable]. Its methods can be enhanced by running on the GPGPU before running on the CPU. These methods are marked with [GpuRunnable].

The interception mechanism described here uses .NET Contexts which have COM+ as precursor (later known as .NET component services). A context is an execution environment that contains one or more objects that require the same component services. When a context-bound object is created, the runtime checks the context where the activation request was made.

If this context provides the services required by the new object, then the object is created in the creator’s context. If the creator’s context is not suitable, the runtime creates a new context. In general, any object accessing a context-bound object does so through a proxy object and when the proxy is created (the context-bound object is marshaled to the other context), the runtime sets up a chain of “sink” objects that add the component services of the context to a method invocation. When an object is called across a context boundary, the method invocation is converted into a message object that is passed through the chain of sinks.

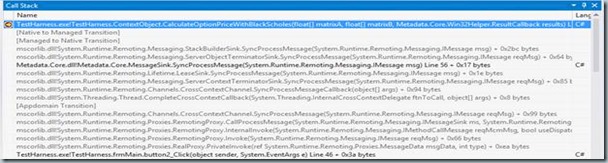

That explains why our demo application’s stack trace shows a few calls to Runtime.Remoting, AppDomain Transition and switching context (from managed to native when calling the GpuProcessor and back to native to managed when the callback is called from the Win32 DLL, this is done to return the results to the caller).

Figure 1 – Demo Application Stack Trace

Part II: Efficiently using the hardware available by leveraging the GPGPU

Nowadays is a great time to become a software developer, and I can honestly say that if I had to learn to code from scratch, I would definitely learn C++ first. We have a new standard C++11 and the ISO committee is working already on the new standard C++14, but that is not the only cool thing about it, everything is moving towards the cloud and mobile devices, so once again high performance and low latency systems have always been C++ specialty.

Some other good reasons to build C++ applications are direct access to memory and hardware, and that is precisely the purpose of this article on how to efficiently use the hardware we have available. As developers we tend to think that everything has to run on the CPU, but that is not entirely true because Microsoft introduced C++ AMP for over a year now.

At this moment you will be wondering one of these questions: Do I need to learn a different language? Do I need to buy a special SDK or libraries? Will my C++ AMP code run on different hardware? And answering those questions: No, you do not have to learn anything because it is C++, C++ AMP is a library (VCAMP110D.DLL – Debug) and it has a dependency on the MS C Runtime libraries and yes, it will potentially run on different hardware as long as your video card driver supports it.

There are a few resources on C++ AMP available online and there is one excellent book written by fellow MVP Kate Gregory called “C++ AMP: Accelerated Massive Parallelism with Microsoft® Visual C++®”

The C++ AMP code demonstrated here are matrix multiplication and calculation of options pricing (Black-Scholes Model). Any other heavy arithmetic operation which involves matrices is a potential candidate for parallelization, therefore can be executed on the GPGPU while the CPU does something else. If you are a derivative software developer you might find this useful then.

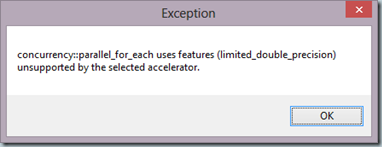

One thing to notice is that not all GPGPUs support operation with doubles and if you do then you might get the exception below

Figure 2 – Limited_Double_Precision not supported

The GpuProcessor is a Win32 DLL that contains the CGPUProcessor which is a C++ class that is exported as a C Library to work around the name mangling. The function prototype for CalculateOptionPriceWithBlackScholesInGpu is shown below

void CalculateOptionPriceWithBlackScholesInGpu(float* matrixA, float* matrixB, int matrixALength, int matrixBLength, float rate,

float volatility, float maturity, const void* returnSink)

As you can see, we pass a couple of float arrays and their length, the next three parameters (rate, volatility and maturity) are retrieved from attribute and then pass into the function, in order to notify .NET (CPU) that calculation has completed on the GPU we call a callback (const void* returnSink) passed in as a parameter too that is cast to

typedef void (*ptrClrDelegate) (void* results, int itemCount, int operation);

CalculateOptionPriceWithBlackScholesInGpu method

void CGPUProcessor::CalculateOptionPriceWithBlackScholes(const void* returnSink, std::vector<float>& vC,

const std::vector<float>& vA,

const std::vector<float>& vB,

const float& rate, const float& volatility,

const float& maturity) {

if (vA.size() == vB.size()) {

auto arrayLength = vA.size();

array_view<float, 1> c(arrayLength, vC);

array_view<const float, 1> a(arrayLength, vA);

array_view<const float, 1> b(arrayLength, vB);

c.discard_data();

auto normalDist = [&](const float& value) restrict(amp) ->

float {return (1.0f / fast_math::sqrt(2.0f * (float) PI)) *

fast_math::exp(-0.5f * value * value);};

parallel_for_each(concurrency::extent<1>(arrayLength), [=](index<1> idx) restrict(amp) {

auto s = a(idx) * fast_math::exp(-rate * b(idx));

auto d1 = b(idx) * a(idx) * fast_math::exp(-rate * b(idx));

c[idx] = d1 / s;

});

c.synchronize();

((ptrClrDelegate) returnSink)(vC.data(), vC.size(),

MathOperation::CalculateOptionPriceWithBlackScholes);

}

}

The above code snippet runs on the GPU, it takes the input data in the form of arrays (converted to STL’s vectors) which then were used to create three array_view that allow us to synchronize any modifications made to the array_view back to its source data. I guess it does something similar to what OpenMP does, because I do not know the internals of C++ AMP.

Part III: Putting all the pieces together

So far we have described the managed and native components of our solution, now let us see how all the pieces work together, the code snippet below shows how the GpuRunnable attribute is used, as you can see the method body all it does is the retrieval of the results from the AppDomain (stored in the button_click event handler once the GpuProcessor has finished running code on the GPGPU).

Intercepted method (Delegated to GpuProcessor)

[Enhanceable]

public class ContextObject : ContextBoundObject {

public event MultiplicationCompleteDelegate OnGpuOperationComplete;

[GpuRunnable(Consts.DefaultGpuProcessor, Consts.CalculateOptionPriceMethod,

"Rate=0.5;Volatility=10.0;Maturity=10.0")]

public void CalculateOptionPriceWithBlackScholes(float[] matrixA, float[] matrixB,

Win32Helper.ResultCallback results) {

var res = AppDomain.CurrentDomain.GetData(Operation.OptionPriceCallBlackScholes.ToString());

}

}

Button’s click event handler

private void button2_Click(object sender, EventArgs e) {

var myContextObject = new ContextObject();

var randomNumber = new Random(int.Parse(Guid.NewGuid().ToString().Substring(0, 8),

NumberStyles.HexNumber));

var matrixA = Enumerable.Range(1, Consts.MaxItemCount).Select(x => (float)randomNumber

.Next(1, 500)).ToArray();

var matrixB = Enumerable.Range(1, Consts.MaxItemCount).Select(x => (float)randomNumber

.Next(1, 100)).ToArray();

myContextObject.OnGpuOperationComplete += ((object o, float[] res, int operation) =>

AppDomain.CurrentDomain.SetData(((Operation)operation).ToString(),

res));

myContextObject.CalculateOptionPriceWithBlackScholes(matrixA, matrixB, myContextObject.GetResultsFromGpu);

}

The way AppDomain.SetData and AppDomain.GetData work is similar to the way TLS (Thread Local Storage) does with TlsAlloc, TlsSetValue and TlsGetValue. The main difference is that it uses a Dictionary<string, object[]> and it does not apply to any specific thread but to the AppDomain as a whole.

Figure 3 – AppDomain’s LocalStore

Method responsible for dynamically loading/executing GpuProcessor

public void LoadNativeModuleAndRun(IGpuRunnable gpuRunnable, IMethodCallMessage message) {

if (!File.Exists(gpuRunnable.GpuProcessor))

throw new FileNotFoundException(Consts.GpuProcessorNotFound,gpuRunnable.GpuProcessor);

var hModule = Win32Helper.LoadLibrary(gpuRunnable.GpuProcessor);

var isMultiplication = string.IsNullOrEmpty(gpuRunnable.CalculationParameters);

if (hModule != IntPtr.Zero) {

var hProcAddress = Win32Helper.GetProcAddress(hModule, gpuRunnable.GpuProcessorMethod);

if (hProcAddress != IntPtr.Zero) {

if (isMultiplication) {

var functor = Marshal.GetDelegateForFunctionPointer(hProcAddress,

typeof(Win32Helper.GpuMultiplicationProcessorCallback)) as

Win32Helper.GpuMultiplicationProcessorCallback;

var matrixA = (float[])message.InArgs[0];

var matrixB = (float[])message.InArgs[1];

var notificationCallback = (Win32Helper.ResultCallback)message.InArgs[2];

functor(matrixA, matrixB, matrixA.Length, matrixB.Length, Consts.ExtentRowSizeOne,

Consts.ExtentColumnSizeTwo, Consts.ExtentColumnSizeOne,

Marshal.GetFunctionPointerForDelegate(notificationCallback));

} else {

var parameters = new ScholesCalculationParameters();

var tokenized = gpuRunnable.CalculationParameters.Split(';').ToList();

var paramType = parameters.GetType();

var flag = BindingFlags.SetProperty | BindingFlags.GetProperty |

BindingFlags.Instance | BindingFlags.Public;

var functor = Marshal.GetDelegateForFunctionPointer(hProcAddress,

typeof(Win32Helper.GpuProcessorCalculateOptionPriceCallback)) as

Win32Helper.GpuProcessorCalculateOptionPriceCallback;

var matrixA = (float[])message.InArgs[0];

var matrixB = (float[])message.InArgs[1];

var notificationCallback = (Win32Helper.ResultCallback)message.InArgs[2];

tokenized.ForEach(x => {

var prop = paramType.GetProperty(x.Substring(0, x.IndexOf('=')), flag);

prop.SetValue(parameters, float.Parse(x.Substring(x.IndexOf('=') + 1)));

});

functor(matrixA, matrixB, matrixA.Length, matrixB.Length, parameters.Rate,

parameters.Volatility, parameters.Maturity,

Marshal.GetFunctionPointerForDelegate(notificationCallback));

}

}

Win32Helper.FreeLibrary(hModule);

}

}

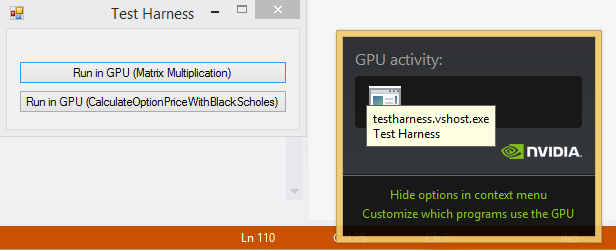

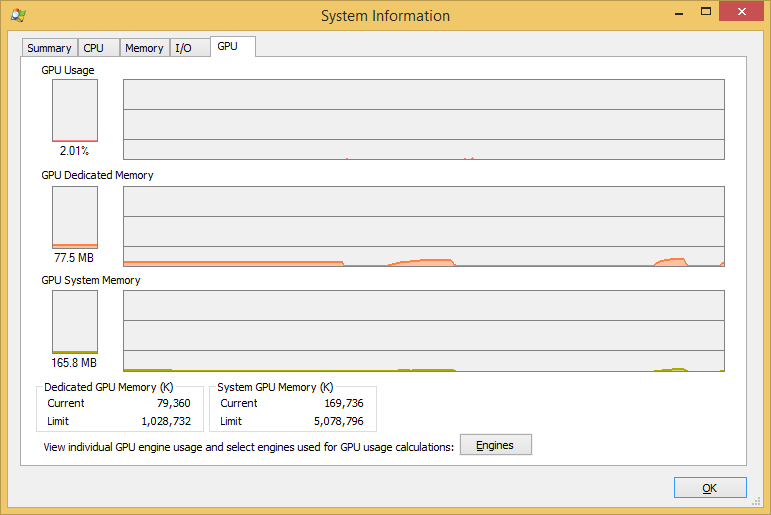

We can see our GPU’s activity after running our test harness application either in NVidia’s notification tool or Process Explorer (both are shown below)

Code execution on the GPGPU can run up to 100 times faster than on the CPU, but beware not every code can benefit from GPGPU parallelization. This article intended to demonstrate an alternative to enhancing .NET code via Visual C++ with metaprogramming. The same principle applies for managed code or another scenario, let us suppose that you want to record a sequence of steps (methods) as well as their parameters and then replay the same steps in the same order (In case, something goes wrong the first time), well you could intercept the method call before even executing it.

This approach also opens another opportunity for AOP (Aspect Oriented Programming) but it is not in the scope of this article. Please find source code here

Regards,

Angel

![clip_image002[6] clip_image002[6]](http://www.angelhernandezm.com/wp-content/uploads/2013/10/clip_image0026_thumb1.jpg)

0 thoughts on “Enhancing .NET code via Metaprogramming with Visual C++”